LLM-Based Email Agent to Minimize Payment Delays

How an email parsing assistant trained to detect payment-related problems achieved a 90% acceptance rate, reduced customer suspensions by 2%, and delivered major workflow improvements.

Tesorio, Accounts Receivable

Product Strategy • Research • Design • GenAI

90% of payment communication is by email, so things get missed

Corporate collections teams are lean with heavy case loads, and over 90% of communication is handled through email. Most late payments stem from data and clerical issues—not refusal to pay—but discovering these issues can be a challenge for collectors who have to balance time managing email with monitoring customer invoices.

The proposal was to use LLM-driven email parsing to detect payment issues in incoming messages. The open question was how, and where, to bring these issues to the collector's attention.

Is this an email problem, or an information problem?

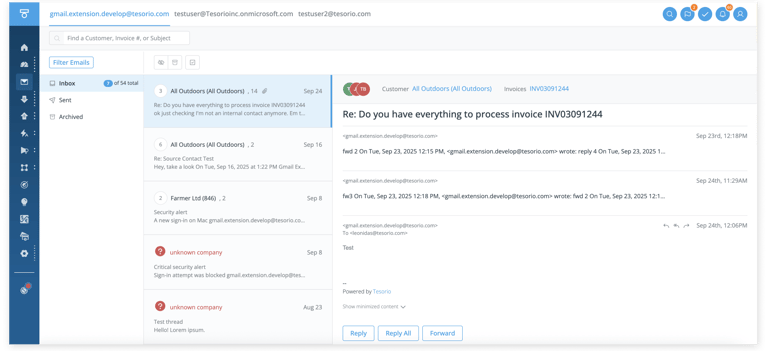

The initial proposal was to incorporate issue parsing into the application's Inbox module (your Gmail or Outlook plumbed directly into the system), in part to make that feature more attractive. I had two concerns about this approach: (1) the Inbox had long-standing technical issues that would not be resolved as part of this work; (2) the Inbox used a different code base than email features in the rest of the application.

My hunch, based on other research, was that email parsing would be more valuable and extensible if we integrated it directly into invoice records (which also included email capabilities). Additionally, if we were using parsing to extract "meaning" about the state of the invoice, we were moving the workflow forward from email-focused to information- (and action-) focused.

If AI is a possible solution, what are expectations for performance?

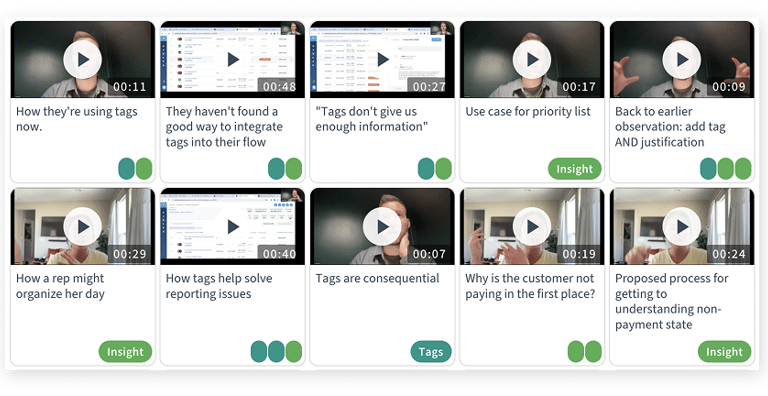

We already had a wealth of data on how users worked with email in the system from earlier research I had conducted. So for this initiative, conversations with users focused on perceptions and expectations regarding AI. The entire team participated in the interview sessions, including our two data scientists.

Earlier research regarding email in the system and goals for automation enabled the team to tighten the focus of the discovery sessions on AI-specific topics.

User conversations focused on perceptions and expectations regarding AI.

"As good as a warm body", it turns out

Users expected AI to perform as well as an "intern". They had some specific criteria for the technology to be worth their time and deliver net positive value:

• It needs to be right at least 50% of the time

• It needs to be auditable (show its work)

• It needs to learn

Problem Statement

"Help collectors recognize and prioritize invoice issues detected in email as early as possible so they can be resolved before the payment goes late."

Success = at least 55% positive action

Tuning the machine to recognize issues

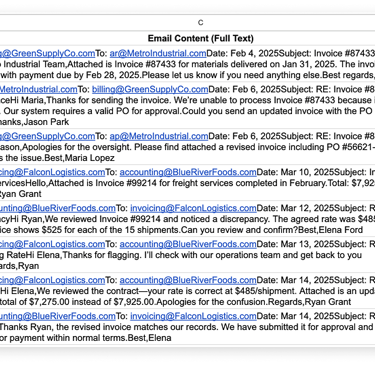

An unexpected, but surprisingly interesting, part of the project was working with one of the data scientists to train the LLM. He would compile large sets of emails with multiple threads, and I would review whether the machine had made the right call.

Spending time deep in the sprawling, convoluted threads gave me even more sympathy for collectors and their attempt to manually manage this work. I also came away amazed by the AI's level of accuracy and a firm conviction that this feature was long overdue.

Serving insights in context

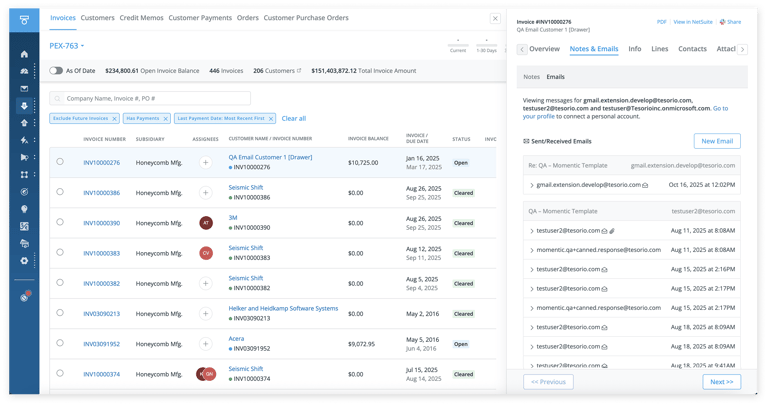

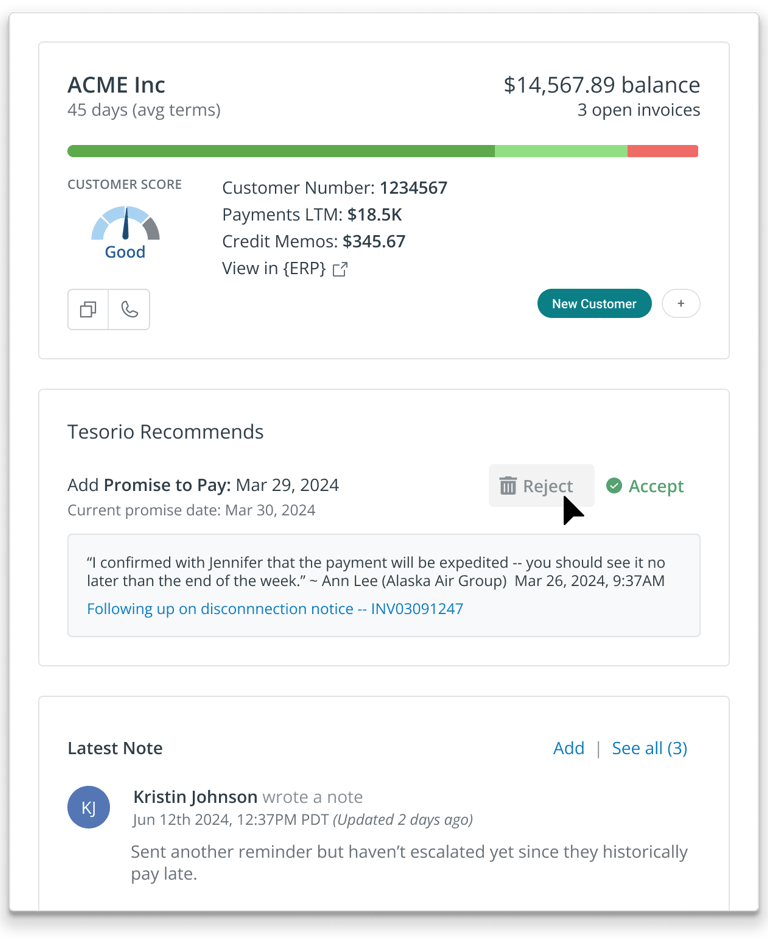

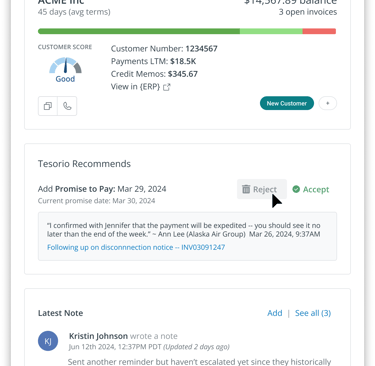

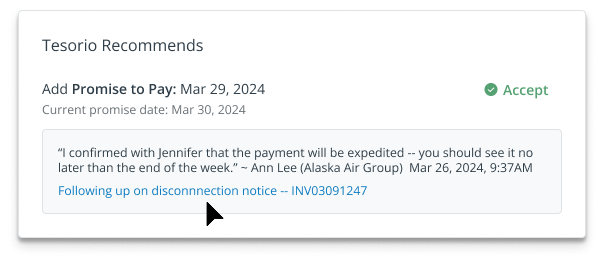

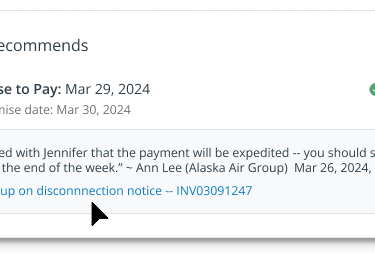

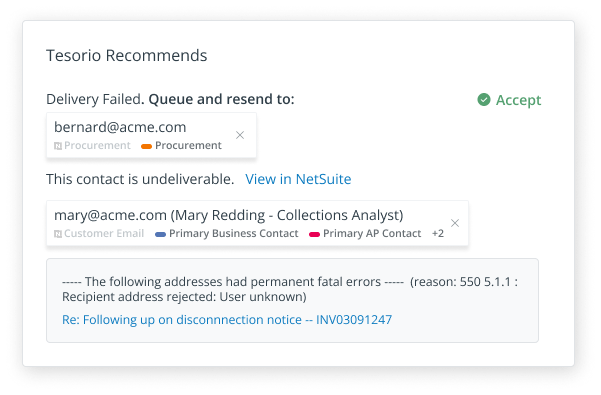

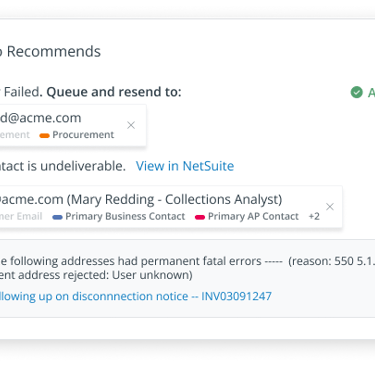

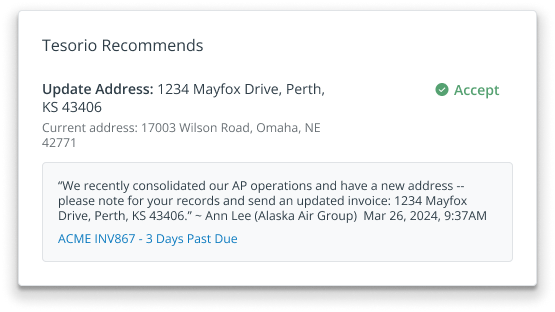

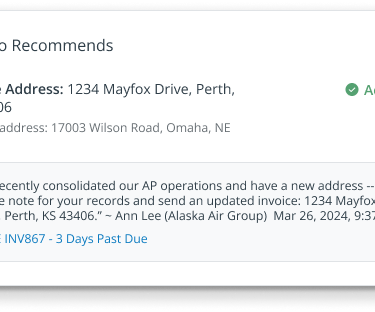

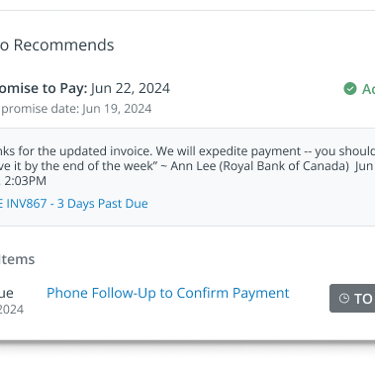

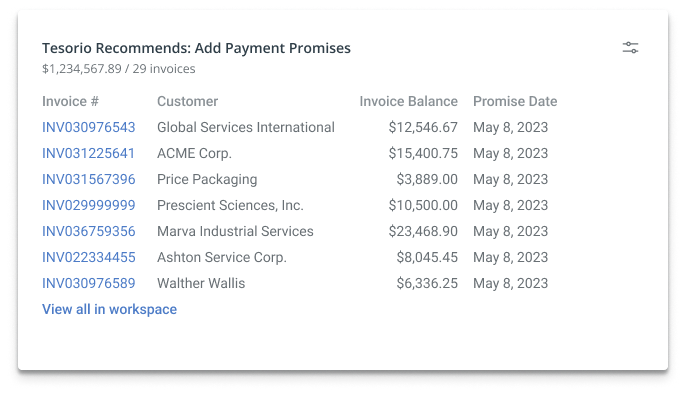

To the extent possible, I wanted to pull users out of their Inbox and keep them focused and in context. My design integrated the recommendations agent directly into the invoice record, where collectors spend the majority of their time.

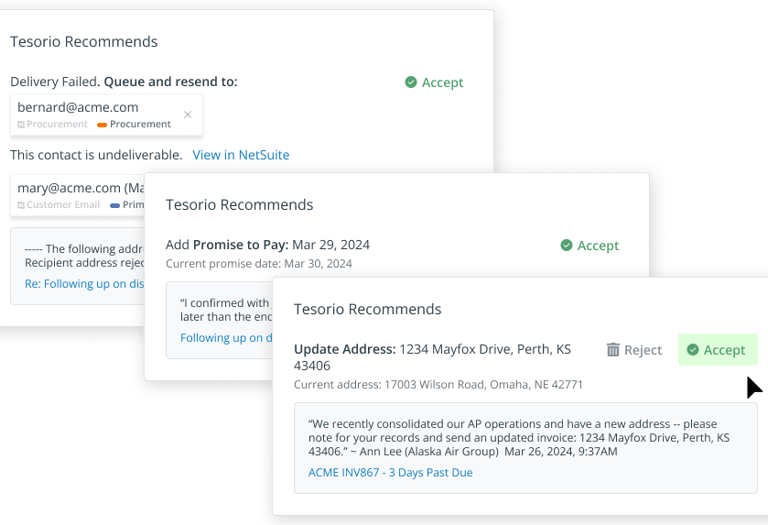

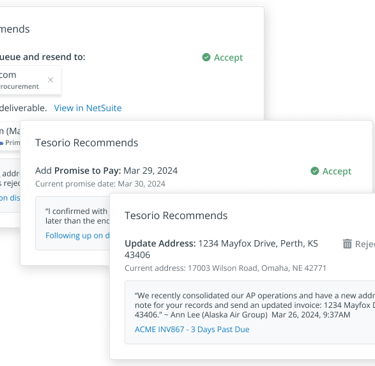

We used an Accept/Reject paradigm for taking action. The Reject action was important for two reasons: (1) it was the mechanism that removed a bad recommendation from view; (2) the rejected recommendations became our primary data source for refining the recommendations prompt. This refinement process raised the acceptance rate by about 10 percentage points, from roughly 80% at launch to 90%.
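The two roles of the Reject action described above can be sketched in a few lines. This is an illustrative sketch only, not the product's implementation: the class names, field names, and status values are all hypothetical, and how accepted items behave on screen is an assumption.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Recommendation:
    invoice_id: str
    issue_type: str       # hypothetical label, e.g. "missing_po"
    email_excerpt: str    # the text the agent based its call on
    status: str = "open"  # "open", "accepted", or "rejected"


@dataclass
class RecommendationList:
    items: List[Recommendation] = field(default_factory=list)
    rejection_log: List[Recommendation] = field(default_factory=list)

    def accept(self, rec: Recommendation) -> None:
        rec.status = "accepted"

    def reject(self, rec: Recommendation) -> None:
        # Role 1: mark the recommendation so it drops out of view.
        rec.status = "rejected"
        # Role 2: keep it as review material for refining the prompt.
        self.rejection_log.append(rec)

    def visible(self) -> List[Recommendation]:
        # Assumption: only rejected items are hidden from the collector.
        return [r for r in self.items if r.status != "rejected"]
```

In this sketch, the rejection log is simply a list that someone reviews later; the point is that a single user action both cleans up the screen and produces the data used for refinement.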

Recommendations were integrated directly into the invoice record.

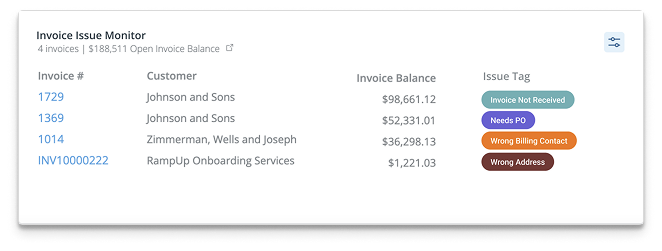

Designing for trust and showing our work

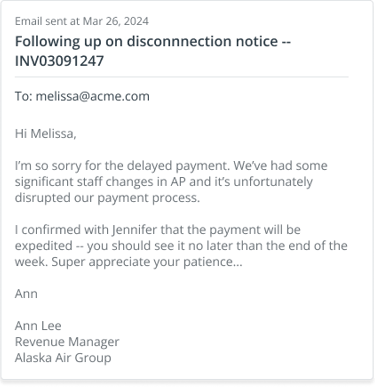

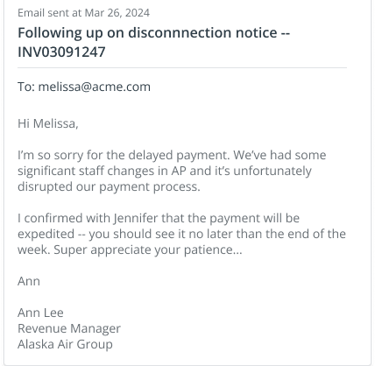

A common theme during discovery was understanding how the agent was making recommendations. People were very willing to adopt AI-based processes, but the system had to prove itself first. To that end, the design showed the minimum amount of information we thought a user needed to make a decision without interacting with the page. We then provided access to the full thread on hover using an existing pattern.

The design included the minimum amount of information necessary for the user to make a decision without having to interact with the page. The full email thread was available on hover if more context was needed.

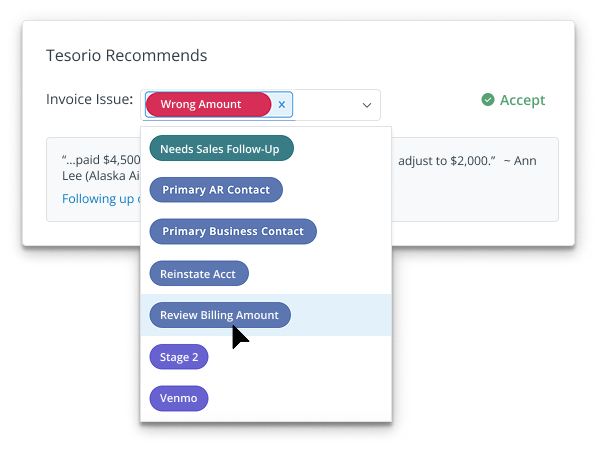

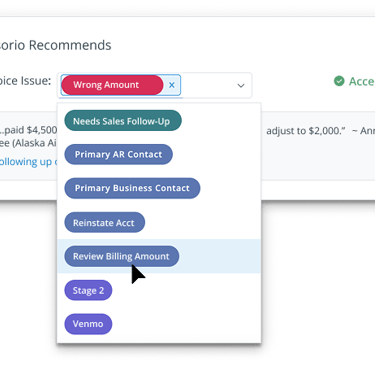

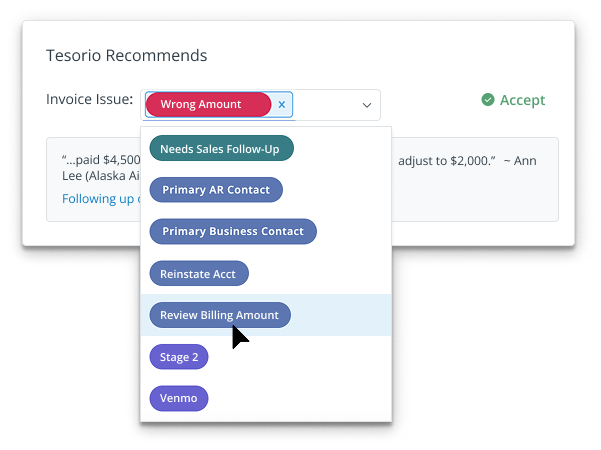

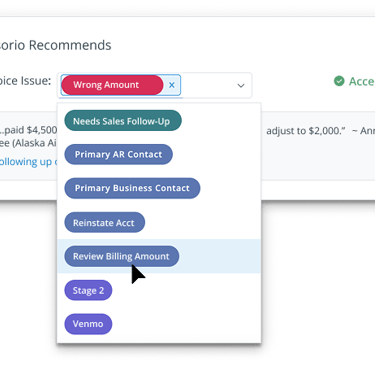

Scaling for multiple issue types

The team had identified a range of common payment issues. The interface and resulting actions needed to support all of them, as well as multiple recommendations for a single invoice.

There was a wealth of common payment issues to capture. Before this feature, the first time a collector might learn that something was wrong was after the invoice had already gone late.

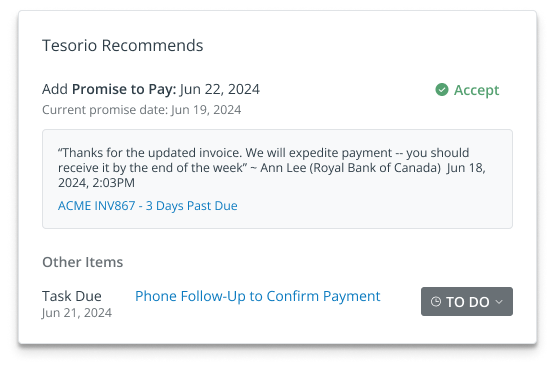

Aggregating all of a collector's to-dos

A known challenge in the application, revealed in prior research, was keeping track of all the different actions that might be needed on an invoice. To discover these, users had to click through multiple tabs on the record.

By aggregating all open items as part of the recommendations feature, all users would benefit, not just those using the AI-based functionality (customers had to opt in since all their email data was being sent to OpenAI).

Recommendations weren't limited to AI-generated content -- it was the first instance of developing a priority list in the application.

The real need for learning

The data scientists and executives at first weren't convinced that the AI needed learning capabilities. Tag-based recommendations proved the point. Users often applied tags to track specific issue types so a set could be resolved in bulk.

Users expected the system to be as good as an intern (or a "warm body" per one collector). In the case of tags, the recommendation agent would suggest a default tag appropriate to the issue type. However, many companies customize their tags to better reflect their own processes. The common expectation was that the first time a user chose a different tag than the default, the system would subsequently remember that preference (just like an intern would).
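The "remember it the first time" expectation above can be sketched as a small lookup that falls back to a default tag until a different choice is recorded. This is a sketch under stated assumptions, not how the product works: the tag names, the issue-type labels, and the decision to store preferences per company (rather than per user) are all hypothetical.

```python
from typing import Dict, Optional, Tuple

# Hypothetical defaults: issue type -> suggested tag.
DEFAULT_TAGS: Dict[str, str] = {
    "missing_po": "Missing PO",
    "disputed_amount": "Dispute",
}


class TagPreferences:
    def __init__(self, defaults: Dict[str, str]) -> None:
        self._defaults = dict(defaults)
        # (company_id, issue_type) -> tag the company actually uses
        self._overrides: Dict[Tuple[str, str], str] = {}

    def suggest(self, company_id: str, issue_type: str) -> Optional[str]:
        # Prefer a remembered choice; otherwise fall back to the default.
        key = (company_id, issue_type)
        return self._overrides.get(key, self._defaults.get(issue_type))

    def record_choice(self, company_id: str, issue_type: str, tag: str) -> None:
        # A single correction is enough to change future suggestions.
        self._overrides[(company_id, issue_type)] = tag
```

For example, after `record_choice("acme", "missing_po", "PO Needed")`, a call to `suggest("acme", "missing_po")` returns the customized tag, while other companies still get the default.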

How many times would a user be willing to change a recommendation to her preferred value before deciding the system is NOT intelligent?

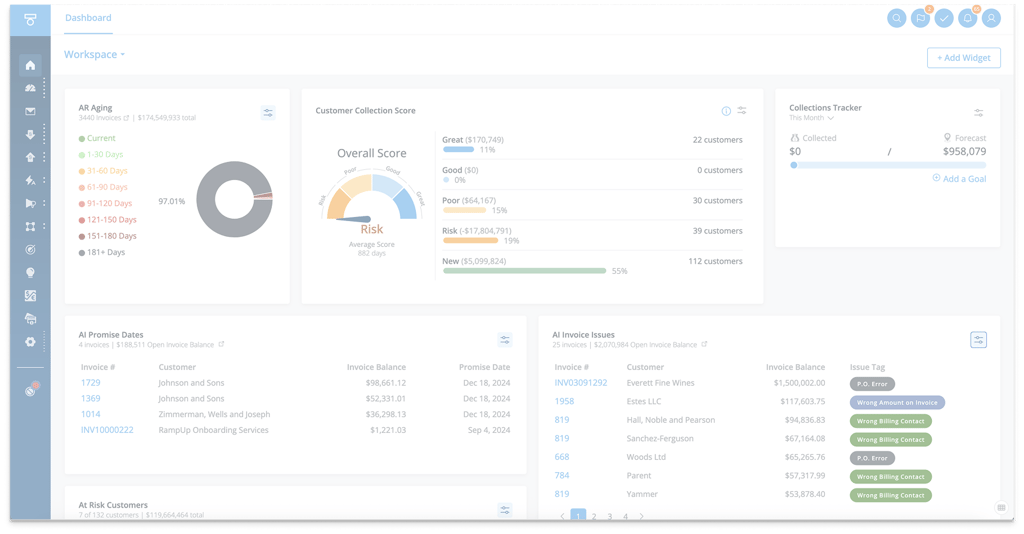

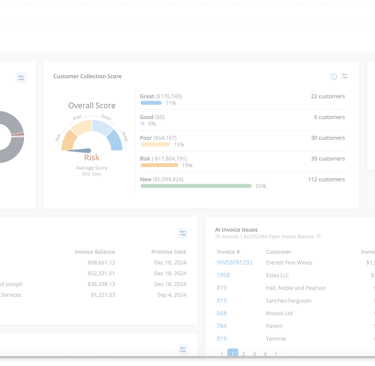

Prioritizing and elevating work

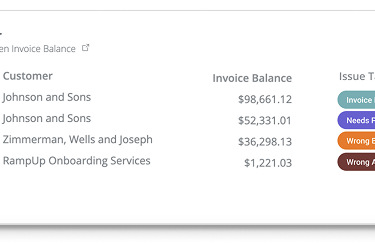

A longstanding mission of mine on all projects was to move work up in the system so users have to navigate and interact less. Integrating the parsed issues directly into invoice records minimized back and forth between the Inbox and the rest of the application. But users often had many different saved views of their invoice records, and had to navigate more deeply in the application to get to those views. I leveraged a library of existing dashboard widgets and designed a customizable table where users could choose which issues to include, which columns to display, and set a preferred sort. These widgets became a precursor to a unified priority list for the application.
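The three choices a user makes in the customizable table (which issues, which columns, which sort) can be sketched as a small configuration applied to a list of issue records. The field names, issue types, and the `IssueTableConfig` structure are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Sequence


@dataclass(frozen=True)
class IssueTableConfig:
    issue_types: Sequence[str]  # which issues to include
    columns: Sequence[str]      # which columns to display
    sort_by: str                # preferred sort column
    descending: bool = False


def build_rows(
    issues: List[Dict[str, Any]], config: IssueTableConfig
) -> List[Dict[str, Any]]:
    # Keep only the issue types the user selected.
    selected = [i for i in issues if i["issue_type"] in config.issue_types]
    # Apply the user's preferred sort.
    selected.sort(key=lambda i: i[config.sort_by], reverse=config.descending)
    # Project each record down to the chosen columns.
    return [{col: issue[col] for col in config.columns} for issue in selected]
```

Note that the sort column does not have to be one of the displayed columns, since sorting happens before the records are trimmed.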

Leveraging an existing widget system for the application dashboard, I designed customizable tables so users could see an aggregated set of ALL issues in a single list. These widgets became the precursor to a unified priority list.

Impact and Outcomes

We achieved a 90% acceptance rate for issue recommendations, up from ~80% at initial launch, by analyzing rejections and refining the prompt.
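For readers who want the arithmetic spelled out, here is a minimal sketch. It assumes acceptance rate means accepted recommendations divided by all recommendations the user acted on (accepted plus rejected); the source does not define the metric, and the counts below are made-up round numbers chosen only to reproduce the ~80% and 90% figures.

```python
def acceptance_rate(accepted: int, rejected: int) -> float:
    """Share of acted-on recommendations that were accepted (assumed definition)."""
    decided = accepted + rejected
    if decided == 0:
        raise ValueError("no recommendations have been accepted or rejected yet")
    return accepted / decided


# Illustrative counts only, not real product data.
at_launch = acceptance_rate(accepted=80, rejected=20)
after_refinement = acceptance_rate(accepted=90, rejected=10)

# Improvement expressed in percentage points, rounded to avoid float noise.
point_gain = round((after_refinement - at_launch) * 100, 1)
```

Under these assumed counts, the change is about 10 percentage points, which is a larger relative improvement when measured against the launch baseline.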

This acceptance rate also led to a 2% decrease in unintentional service suspensions triggered by non-payment.